Will driverless cars ever actually be a thing?

In a somewhat related topic, France is planning to ban the sale of petroleum cars by 2040.

I saw this on the BBC and thought you should see it:

France set to ban sale of petrol and diesel vehicles by 2040 - http://www.bbc.co.uk/news/worl...

weirdbeard said:

Steve said:

Would the banning of driven cars constitute a taking under the Fifth Amendment?

At the risk of repeating myself, I think it will come about not via an outright ban, but by the insurance market making it effectively cost-prohibitive to use a human-driven car at some point (i.e., once the technology has been developed to the point that driverless cars are markedly safer than driven ones).

I don't think that you were the only one who mentioned it.

Unless they plan to close the borders, I hope they still have gas stations.

Gilgul said:

In a somewhat related topic, France is planning to ban the sale of petroleum cars by 2040.

I saw this on the BBC and thought you should see it:

France set to ban sale of petrol and diesel vehicles by 2040 - http://www.bbc.co.uk/news/worl...

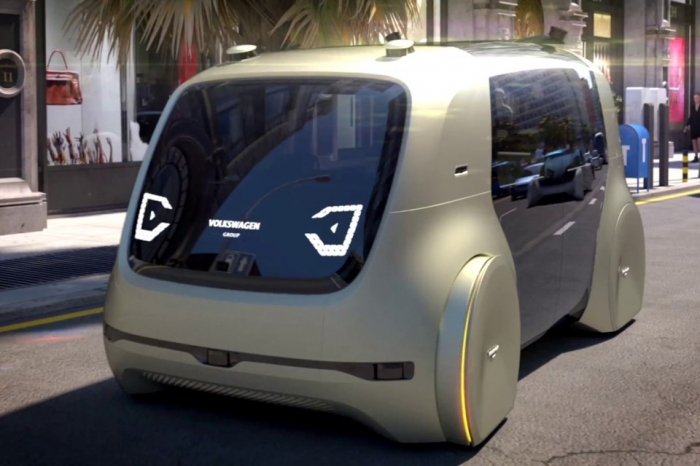

Some plans, brand by brand, from The Atlantic

To clarify a few points brought up here: Teslas have the hardware but do not currently have the software capable of "self-driving". New Teslas sold today come with Autopilot 2 hardware, which consists of 8 cameras, numerous ultrasonic sensors, a radar, and a powerful onboard graphics processing computer. Tesla claims this hardware will be enough to enable self-driving within the next few years, once the machine-learning self-driving software has matured and the regulatory and legal issues have been addressed. All Teslas upload their real-world driving data to the cloud every day, so the machine-learning approach allows for exponentially fast learning as more Teslas come on the road.

The software currently available is called Enhanced Autopilot, which means lane-keeping and speed-keeping on a highway, nothing more. I've driven on road trips with it and it's great: it frees the driver from keeping the lane, so you can just supervise and scan the horizon for any oncoming situations where you would want to take over. It's not useful or safe on local roads, as it doesn't respond to traffic signs and lights. I turn it off if there are construction zones, narrow lanes, badly painted lines, erratic drivers, etc. Anything abnormal. It excels in mindless stop-and-go traffic, as well as long-haul interstate driving. The edge cases are definitely the challenge for self-driving, but they are by no means insurmountable.

Gilgul said:

Perception is part of the issue. I had read in the Economist a while ago (but am too lazy to search for the link) that for some time now it has been technically possible to have a fully automated passenger airplane. In many ways it is an easier task than driving since the path is, except if something goes wrong, cleared for the plane. Much of a flight is now on autopilot anyway. There is a big shortage of trained pilots. But how many people would be willing to get on a plane that will be flown without a trained pilot on board?

One huge advantage to having a human pilot in the front of the plane is that the pilot will die if the plane crashes, so the pilot has a big incentive to prevent a crash. A person piloting by remote control on the ground has a lot less of an incentive. I expect this will keep pilots in planes for a long time, if society has any sense left.

I think he meant truly automated, not remotely piloted. I suppose there could be a human backup on the ground in case odd flight patterns are detected.

We, and every generation that came before us, look at new things as crazy, impossible, or scary, but we eventually get so used to them that they become background noise. I have no doubt driverless cars will come into wide use.

Tom_Reingold said:

Gilgul said:

Perception is part of the issue. I had read in the Economist a while ago (but am too lazy to search for the link) that for some time now it has been technically possible to have a fully automated passenger airplane. In many ways it is an easier task than driving since the path is, except if something goes wrong, cleared for the plane. Much of a flight is now on autopilot anyway. There is a big shortage of trained pilots. But how many people would be willing to get on a plane that will be flown without a trained pilot on board?

One huge advantage to having a human pilot in the front of the plane is that the pilot will die if the plane crashes, so the pilot has a big incentive to prevent a crash. A person piloting by remote control on the ground has a lot less of an incentive. I expect this will keep pilots in planes for a long time, if society has any sense left.

martini said:

To clarify a few points brought up here: Teslas have the hardware but do not currently have the software capable of "self-driving". New Teslas sold today come with Autopilot 2 hardware, which consists of 8 cameras, numerous ultrasonic sensors, a radar, and a powerful onboard graphics processing computer. Tesla claims this hardware will be enough to enable self-driving within the next few years, once the machine-learning self-driving software has matured and the regulatory and legal issues have been addressed. All Teslas upload their real-world driving data to the cloud every day, so the machine-learning approach allows for exponentially fast learning as more Teslas come on the road.

The software currently available is called Enhanced Autopilot, which means lane-keeping and speed-keeping on a highway, nothing more. I've driven on road trips with it and it's great: it frees the driver from keeping the lane, so you can just supervise and scan the horizon for any oncoming situations where you would want to take over. It's not useful or safe on local roads, as it doesn't respond to traffic signs and lights. I turn it off if there are construction zones, narrow lanes, badly painted lines, erratic drivers, etc. Anything abnormal. It excels in mindless stop-and-go traffic, as well as long-haul interstate driving. The edge cases are definitely the challenge for self-driving, but they are by no means insurmountable.

Here's the thing... how does it know when to cede control to the driver? IOW, at what point does it (or will it) need to alert drivers that the conditions call for a correction or a decision?

I think that's the biggest hurdle to full autonomy: one simply cannot fall asleep and wake up someplace, because there needs to be a window for the driver to take over when an unpredicted situation arises (and not everything can be predicted), or one that requires choosing the lesser of two evils, so to speak (slam into the car ahead or swerve off-road into the ditch, for example).

I know Gilgul meant fully automated, but I thought I would throw in the possibility of remote control as an intermediate contrast.

I'm on the side that sees driverless cars as inevitable. At a certain point, they will be safer than human-driven cars. When they start getting into collisions, people will say what a horrible idea they are, even in the face of improved safety overall. I do understand that we see things with emotions and put rational thinking aside, but don't we WANT to decide these things rationally? We take a risk knowingly whenever we get into a car. (RIGHT?) Why can't we take that risk in an automated car if we know it's better than the risk of trusting a driver? Sure, it may be hard to face, but isn't it rational to TRY to trust whichever is better? It's not just rational, it's the better choice for life and wellbeing.

On the subject of trusting new tech, for a long time I would occasionally veto Google Maps when its driving directions seemed counterintuitive or contrary to my "manly" knowledge of shortcuts and alternative routes around traffic problems in the NYC area. But you know what? The disembodied lady voice is always right. I've swallowed my pride and no longer second-guess her. She knows. She has real-time traffic info. She is in cahoots with the satellite. She has complex algorithms that I lack. I surrender.

Tom_Reingold said:

I know Gilgul meant fully automated, but I thought I would throw in the possibility of remote control as an intermediate contrast.

I'm on the side that sees driverless cars as inevitable. At a certain point, they will be safer than human-driven cars. When they start getting into collisions, people will say what a horrible idea they are, even in the face of improved safety overall. I do understand that we see things with emotions and put rational thinking aside, but don't we WANT to decide these things rationally? We take a risk knowingly whenever we get into a car. (RIGHT?) Why can't we take that risk in an automated car if we know it's better than the risk of trusting a driver? Sure, it may be hard to face, but isn't it rational to TRY to trust whichever is better? It's not just rational, it's the better choice for life and wellbeing.

Spoiler alert ----

In the movie Sully, the dramatic arc revolves around reenactment scenarios showing that the plane could have made it to Teterboro. In his big dramatic monologue, Tom Hanks shows that those scenarios assumed instant action and prior practice, whereas in real life no pilot had been trained for that situation, and it inevitably took time to realize what had happened and react. Once that delay was factored in (as later test scenarios showed), the plane would have crashed before reaching Teterboro.

I bring this up because it's certainly possible that an automated plane could have reacted faster than even the best pilot.

I'm the same way. Google Maps has knowledge of traffic conditions and offers the latest, greatest routes almost all the time. Nowadays, I ask her for directions even when I know the way, and even when she may not find a better one. The advantage is that the next turn is always in front of me; otherwise I tend to drive absent-mindedly and miss turns. When I look up, all I need to see is the next turn. There are cases when I can choose a better route, but they are becoming increasingly rare. I turn the audio off and just look at the screen. I have it mounted beside the steering wheel on top of the dashboard, so I am not diverting my eyes from the road.

By the way, Google Maps is developed and improved in Chelsea, NYC, not on the West Coast. I know some people there.

When Skynet becomes self-aware, "she" is going to have all of us drive 60 mph into walls, so we shouldn't become 100% lax. Until then, it's great.

Tom_Reingold said:

I'm the same way. Google Maps has knowledge of traffic conditions and offers the latest, greatest routes almost all the time. Nowadays, I ask her for directions even when I know the way, and even when she may not find a better one. The advantage is that the next turn is always in front of me; otherwise I tend to drive absent-mindedly and miss turns. When I look up, all I need to see is the next turn. There are cases when I can choose a better route, but they are becoming increasingly rare. I turn the audio off and just look at the screen. I have it mounted beside the steering wheel on top of the dashboard, so I am not diverting my eyes from the road.

By the way, Google Maps is developed and improved in Chelsea, NYC, not on the West Coast. I know some people there.

Yes, that is the biggest hurdle. One solution is to cede some control to a remote operator, as is done with military drones; however, that would require reliable network connectivity and low latency, which won't be available in all cases. In addition, the car can try to rouse the passenger for assistance. In any case, the car's immediate job would be to get itself out of immediate danger, ideally by pulling over at a safe location.

In most of these hypothetical scenarios, all we can ask is "what would a human do?" and try to replicate that human decision with machine learning. We manage to do a lot with two eyes. The computer has much more sensor data to work with, but it lacks the image-processing capabilities of our brains.

This fall, Tesla is promising a fully autonomous drive from LA to NYC, with no controls touched, on a route that is not predetermined, so we will all see soon enough.

ctrzaska said:

Here's the thing... how does it know when to cede control to the driver? IOW, at what point does it (or will it) need to alert drivers that the conditions call for a correction or a decision?

I think that's the biggest hurdle to full autonomy: one simply cannot fall asleep and wake up someplace, because there needs to be a window for the driver to take over when an unpredicted situation arises (and not everything can be predicted), or one that requires choosing the lesser of two evils, so to speak (slam into the car ahead or swerve off-road into the ditch, for example).

dave said:

Singapore is planning a 100% driverless taxi fleet by 2018. First ones are already on the roads. Not sure why these would be decades away for the US.

Dave - how did this turn out? (I honestly don't know.)

Also, what might be the biggest impediment to consumer acceptance of driverless cars is the fact that they don't speed.

Imagine having to actually drive 25mph for any extended period, or bumbling around on the highway at 55. (yeah, yeah, when all cars are driverless the speed limit will be 100mph.)

Two thoughts on the speeding thing:

Number one... if you can work on your laptop or watch TV on your tablet (or entertainment system built into your autonomous vehicle) will you care so much if your drive takes a little longer?

Also, some municipalities seem to take in a lot of revenue from traffic tickets. What happens when more and more cars are unable to speed, park illegally, or run a traffic signal, or, hell, won't even run if a turn signal lamp is busted?

Ok, three thoughts on the speeding thing.

Current speed limits are set with human drivers in mind, including how long it takes them to react to things. Autonomous vehicles with sensors that can detect other cars could probably drive safely at much higher speeds.

If all cars were driven without people, every merge and exit would be smoother. Cars would maintain a steady speed with little or no braking. Even if they drove a little slower, the trip would be much faster (assuming ideal conditions).

The Boeing aircraft issues make me question automation.

How would anyone like to be in a car that brakes hard on a highway because of a defective sensor or software bug? With the car manufacturer telling you, "Don't worry, it rarely happens, and besides, we're working on it; just follow these 'manual intervention steps' to override the braking"? Keep in mind you may have only two or three seconds to avoid being rear-ended. Or consider a sudden-acceleration issue.

Or to have something on the road causing your automatic car to swerve into incoming traffic:

Researchers have been able to fool Tesla's autopilot in a variety of ways, including convincing it to drive into oncoming traffic. It requires the placement of stickers on the road.

1. We proved that we can remotely gain the root privilege of APE and control the steering system.

2. We proved that we can disturb the autowipers function by using adversarial examples in the physical world.

3. We proved that we can mislead the Tesla car into the reverse lane with minor changes on the road.

https://keenlab.tencent.com/en/whitepapers/Experimental_Security_Research_of_Tesla_Autopilot.pdf

I'm sure if there is robust oversight by well-funded and competently staffed government agencies the problems will be minimal.

I also have issues with it, but I think it will iron itself out. If it were widespread and there were a few accidents, it would probably still be a zillion times safer than us human drivers. What I do wonder about is the transition. How does my 35-year-old truck fit into the matrix?

One problem will be perception. Every accident involving an autonomous vehicle will be the subject of scrutiny and national media sensationalism.

Whereas the thousands of traffic deaths that occur now don't often merit much scrutiny, let alone the injuries and traffic problems caused by less severe accidents. (I know traffic seems petty compared to death or injury, but 90-minute jams on a major highway have a cost as well.)

drummerboy said:

dave said:

Singapore is planning a 100% driverless taxi fleet by 2018. First ones are already on the roads. Not sure why these would be decades away for the US.

Dave - how did this turn out? (I honestly don't know.)

Also, what might be the biggest impediment to consumer acceptance of driverless cars is the fact that they don't speed.

Imagine having to actually drive 25mph for any extended period, or bumbling around on the highway at 55. (yeah, yeah, when all cars are driverless the speed limit will be 100mph.)

Looks like a few years away, but there has been progress.

I use self-driving cars every day, but some people call them taxis.

By the way, car accidents are not "accidents". They are mistakes (99 times out of 100). Use "crash" instead.

Thank you.

An accident is a mistake. Unless a driver intentionally causes the incident, "accident" is accurate in describing one auto hitting another.

drummerboy said:

By the way, car accidents are not "accidents". They are mistakes (99 times out of 100). Use "crash" instead.

Thank you.

If my auto insurance were reduced by 95%, I'd be all for driverless vehicles.

apple44 said:

An accident is a mistake. Unless a driver intentionally causes the incident, "accident" is accurate in describing one auto hitting another.

drummerboy said:

By the way, car accidents are not "accidents". They are mistakes (99 times out of 100). Use "crash" instead.

Thank you.

I would submit that the common perception of the word accident is more along these lines:

"an event that happens by chance or that is without apparent or deliberate cause."

When you use the word accident in that way, any notion of fault goes away.


Then call it a self-operating car, with a technician riding along as backup.